Performance Modelling of AI Inference on GPUs

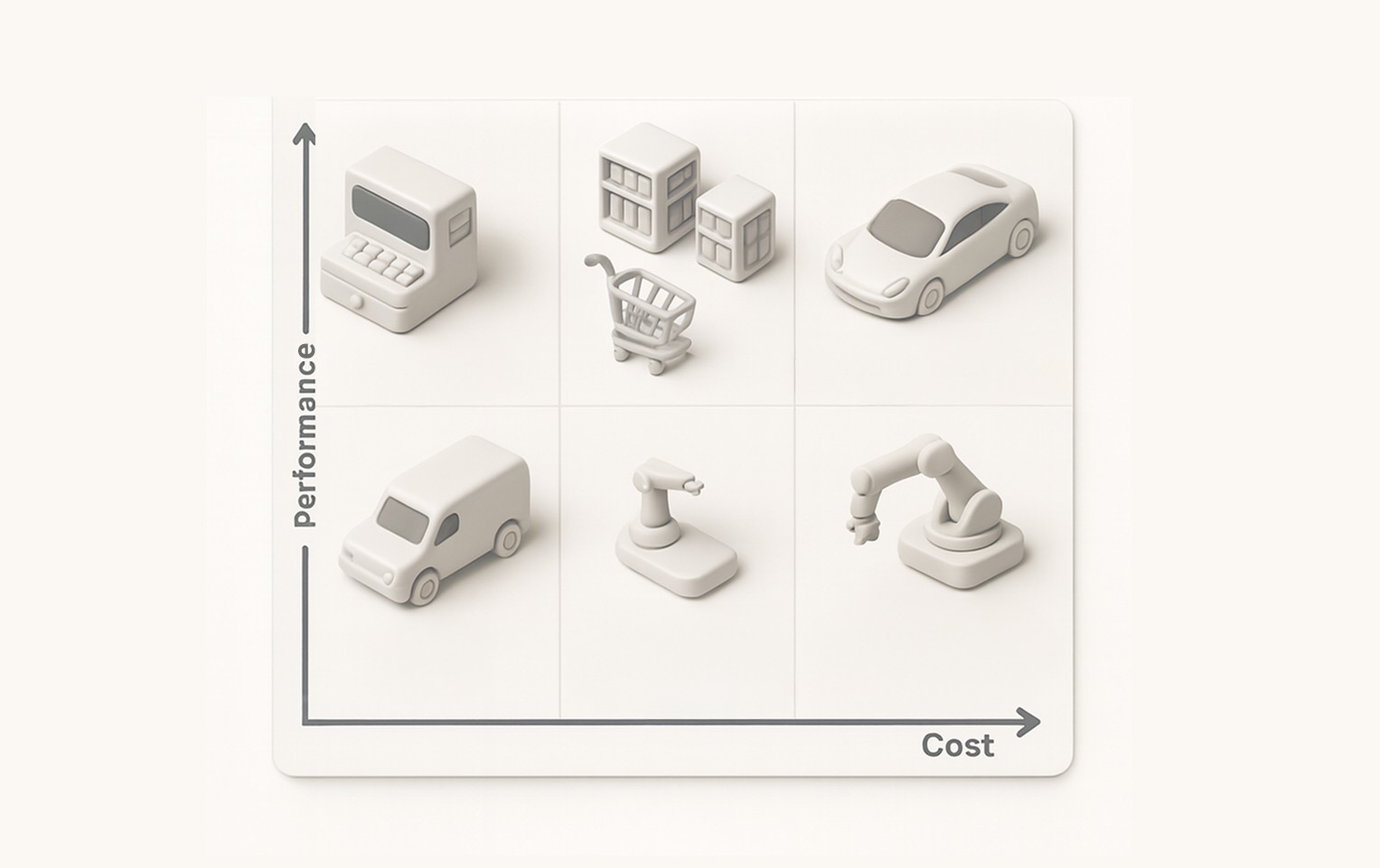

TechnoLynx helped a client reduce AI inference costs by modelling how common AI operations perform across different GPU architectures, so they could predict performance, choose the most cost-effective GPU for each task, and reduce running costs without sacrificing performance.

The Challenge

As the client’s AI models grew more complex, inference costs became a critical problem. They were running multiple models across a wide range of GPU architectures and needed a reliable way to predict inference performance on different GPU topologies, so that they could cut running costs while keeping performance high.

Inference costs became a critical issue.

The client sought a way to reduce these costs by optimising their use of GPUs.

A wide range of GPU architectures to optimise.

The client was running multiple models across many GPU architectures, each with different strengths and weaknesses.

Some hardware features didn’t boost their AI tasks.

They wanted to understand which GPU features were essential for their work and which were unnecessary.

They needed a way to predict inference performance.

They wanted to predict the inference performance of various models on different GPU topologies to reduce running costs without sacrificing performance.

Project Timeline

From identifying critical inference operations to a predictive model and GPU measurement tooling

Problem Framing

Defined the optimisation goal: reduce inference costs by understanding how GPU topologies impact model performance across the client’s hardware set.

Operation Analysis

Focused on core AI operations (e.g., convolutions) and recreated them to study behaviour at a low level for reliable modelling.

Model Build

Built a predictive performance model using Python + OpenCL that accounts for parameters like clock speed, memory bandwidth, compute units, and parallel processing.

GPU Measurement Tooling

Developed a tool to measure characteristics of any OpenCL-capable GPU and benchmark task-specific performance with detailed feedback.

Knowledge Transfer

Delivered reports and workshops so the client’s team could make informed GPU decisions and apply the model in future projects.

The Solution

We built a performance modelling framework that predicts inference behaviour across GPU architectures and clarifies which hardware characteristics matter for specific AI workloads, supported by measurement tooling for OpenCL-capable GPUs.

A model that forecasts how different GPU topologies will perform for specific AI workloads, helping select the most cost-effective GPU per task.

Recreated core AI operations (e.g., convolutions) and modelled their execution behaviour to understand performance drivers across hardware.

A tool that measures characteristics of OpenCL-capable GPUs and benchmarks task performance using factors like clock speed, memory usage, and bandwidth.
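To make the modelling idea concrete, here is a minimal sketch of how a convolution's cost could be estimated from a handful of hardware parameters. It uses a simple roofline-style bound, which is a common textbook technique and not necessarily the model built for the client. The names `GpuSpec`, `conv2d_cost`, `predict_ms`, and the `lanes_per_unit` field are hypothetical, and the device numbers are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class GpuSpec:
    """Hypothetical device description; field names are illustrative."""
    name: str
    compute_units: int
    lanes_per_unit: int   # assumed FP32 lanes per compute unit
    clock_ghz: float
    bandwidth_gbs: float  # memory bandwidth in GB/s

    @property
    def peak_gflops(self) -> float:
        # One fused multiply-add per lane per cycle counts as 2 FLOPs.
        return self.compute_units * self.lanes_per_unit * 2 * self.clock_ghz


def conv2d_cost(n, c_in, h, w, c_out, k, bytes_per_elem=4):
    """FLOPs and minimum memory traffic for a stride-1, same-padded conv."""
    flops = 2 * n * c_out * h * w * c_in * k * k
    # Input, weights, and output are each assumed to move exactly once.
    elems = n * c_in * h * w + c_out * c_in * k * k + n * c_out * h * w
    return flops, elems * bytes_per_elem


def predict_ms(gpu, flops, bytes_moved):
    """Roofline lower bound: the slower of compute time and memory time."""
    compute_ms = flops / (gpu.peak_gflops * 1e9) * 1e3
    memory_ms = bytes_moved / (gpu.bandwidth_gbs * 1e9) * 1e3
    bound = "compute" if compute_ms >= memory_ms else "memory"
    return max(compute_ms, memory_ms), bound


# Made-up device and two made-up layers, for illustration only.
gpu = GpuSpec("example-gpu", compute_units=40, lanes_per_unit=64,
              clock_ghz=1.5, bandwidth_gbs=400.0)
for label, shape in [("3x3 conv", (1, 64, 56, 56, 64, 3)),
                     ("1x1 conv", (1, 16, 56, 56, 16, 1))]:
    ms, bound = predict_ms(gpu, *conv2d_cost(*shape))
    print(f"{label}: at least {ms:.4f} ms ({bound}-bound)")
```

With these illustrative numbers, the 3x3 layer comes out compute-bound and the 1x1 layer memory-bound, which is the kind of distinction that shows which hardware characteristics matter for a given operation. A real model would also need to account for effects this bound ignores, such as cache reuse, occupancy, and kernel launch overhead.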

The Outcome

The result was a detailed performance model that let the client predict how their AI models would perform on different GPU architectures and gave them low-level insight into how their graphics cards worked. The tools we developed measured the performance of their discrete GPUs, enabling informed decisions about which GPU to use for each type of task. By optimising GPU resources, the client reduced the time and money spent on AI inference. Reports and workshops gave the development team a deeper understanding of their hardware.
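As a rough illustration of how per-GPU timings can feed a selection decision, the sketch below picks the cheapest device that still meets a latency target. The function name `cheapest_gpu`, the device names, latencies, and hourly prices are all hypothetical. It also assumes the GPU is fully utilised and processes one inference at a time, which a real deployment may not do.

```python
def cheapest_gpu(candidates, max_latency_ms):
    """Pick the cheapest GPU that meets a latency target.

    candidates maps a GPU name to (latency_ms, price_per_hour).
    Returns (name, cost per 1000 inferences), or None if no GPU qualifies.
    """
    best = None
    for name, (latency_ms, price_per_hour) in candidates.items():
        if latency_ms > max_latency_ms:
            continue  # too slow for this task
        # 1000 inferences take latency_ms seconds in total at batch size 1.
        cost_per_1k = price_per_hour / 3600.0 * latency_ms
        if best is None or cost_per_1k < best[1]:
            best = (name, cost_per_1k)
    return best


# Made-up latencies and prices, for illustration only.
candidates = {
    "gpu_a": (4.0, 1.20),
    "gpu_b": (9.0, 0.45),
    "gpu_c": (25.0, 0.20),
}
print(cheapest_gpu(candidates, max_latency_ms=10.0))
print(cheapest_gpu(candidates, max_latency_ms=5.0))
```

In this example the mid-range `gpu_b` is cheapest per inference under a 10 ms target, while a 5 ms target forces the faster and more expensive `gpu_a`. The latencies could come from either a predictive model or direct benchmarks.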

Key Achievements

Predicted AI model inference performance across a wide range of GPU architectures

Built OpenCL-based tooling to measure GPU characteristics and task-specific performance

Improved decision-making on GPU selection by separating essential vs. unnecessary hardware features

Reduced the time and money spent on AI inference, freeing resources for other areas of the business

Delivered reports and workshops so the client’s development team gained a deeper understanding of how their GPUs worked and could better utilise them in future projects

Want to Reduce AI Inference Costs?

Let’s discuss how performance modelling and GPU optimisation can help you run faster, spend less, and scale reliably across real-world workloads.