Generative AI has become a major part of artificial intelligence development. From creating text to producing images, these models now play a key role in many industries. Whether in image generation, chatbots, or content tools, they continue to gain popularity.

But one important process ensures these models perform well for specific tasks. That process is called fine-tuning. While generative AI models are trained on large, broad data sets, they need more adjustments to meet unique needs. This adjustment phase allows them to handle special cases better.

What Is Fine-Tuning in AI?

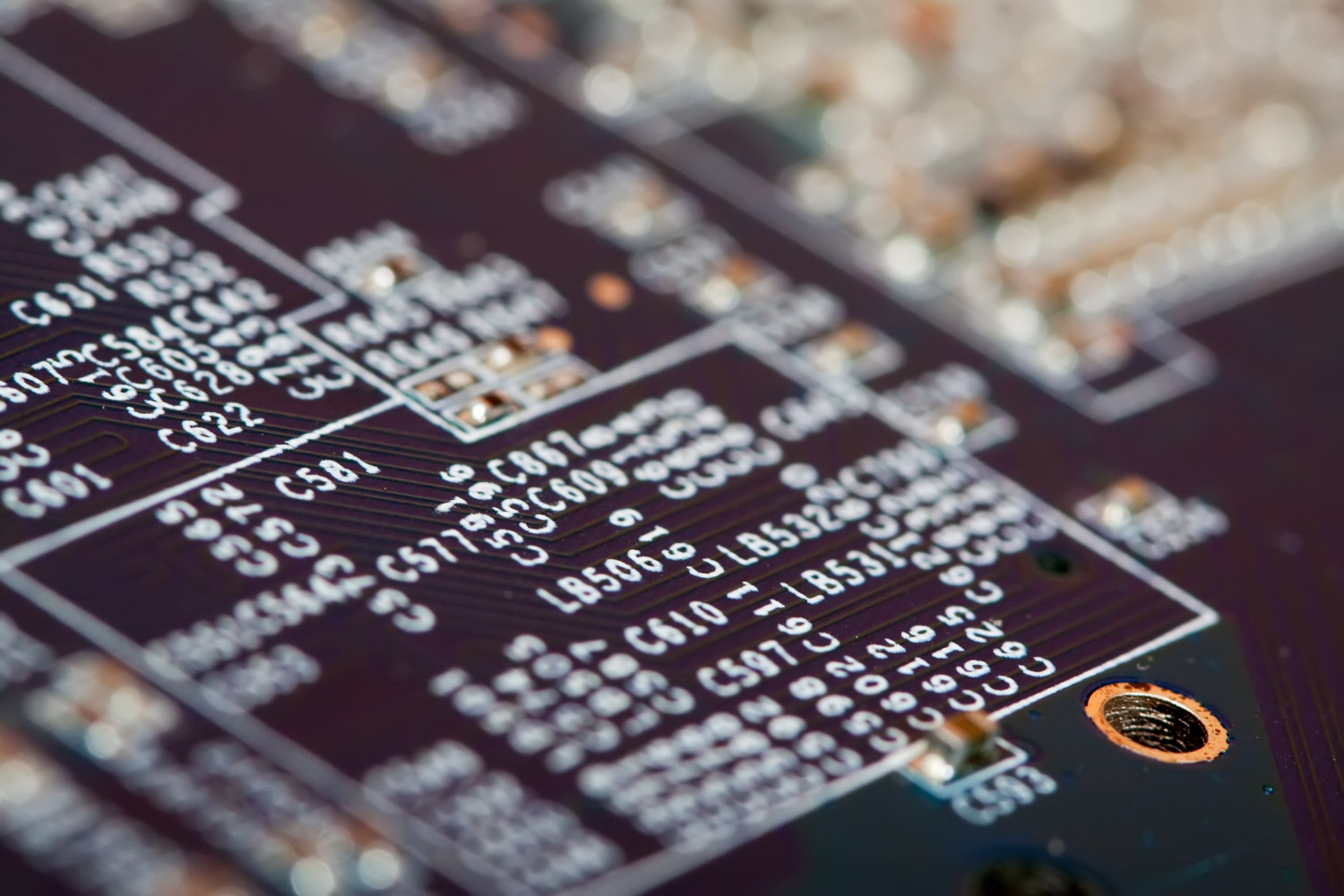

In simple terms, fine-tuning is the process where a pre-trained AI model learns new patterns from task-specific training data. This step is performed after the main training stage.

The initial training stage, where models are trained on general data, teaches them about common patterns. However, this broad knowledge is often too generic. Special applications require the model to adapt and produce better results.

At this point, the model goes through another round of learning. This additional step helps adjust the weights in its neural networks, making the model more focused and effective. As a result, it produces higher-quality and more relevant output.
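
To make the idea of "another round of learning" concrete, here is a minimal sketch in plain Python. It is a toy, not how production models are trained: the "model" is a single line `y = w*x + b`, and the data sets, learning rate, and step counts are all assumptions chosen for illustration. What it shows is the core mechanic: fine-tuning continues gradient descent from the pre-trained weights rather than starting again from zero.

```python
# Toy illustration: fine-tuning continues training from pre-trained weights.
# All data and hyperparameters below are made up for this sketch.

def mse(w, b, data):
    """Mean squared error of the line y = w*x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def train(w, b, data, lr, steps):
    """Run plain gradient descent on the MSE and return the updated (w, b)."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# "General" data follows y = 2x; the narrower "task" data follows y = 2x + 1.
general_data = [(x / 10, 2 * x / 10) for x in range(-10, 11)]
task_data = [(x / 10, 2 * x / 10 + 1) for x in range(-10, 11, 5)]

# Stage 1: pre-training from scratch on the large, general data set.
w, b = train(0.0, 0.0, general_data, lr=0.1, steps=500)
loss_before = mse(w, b, task_data)

# Stage 2: fine-tuning starts from the pre-trained weights, not from zero.
w_ft, b_ft = train(w, b, task_data, lr=0.1, steps=200)
loss_after = mse(w_ft, b_ft, task_data)

print(f"task loss before fine-tuning: {loss_before:.4f}")
print(f"task loss after fine-tuning:  {loss_after:.4f}")
```

In this toy, the slope learnt during pre-training is already right for the task, so fine-tuning mainly has to correct the offset. Real models have far more weights, but the principle of adjusting what is already there is the same.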

Why General Models Are Not Enough

Large machine learning models trained on massive data sets can perform many tasks. Yet, they do not always meet every requirement. General knowledge is useful, but in some fields, precise and relevant information is essential.

For example, in natural language processing (NLP), a pre-trained chatbot may understand common language. However, it might not handle industry-specific queries well. Without task-specific learning, the chatbot’s replies can sound vague or incorrect.

In image generation, tools like Stable Diffusion create impressive results. Still, generating brand-specific visuals or detailed technical images often requires further adjustments. This makes additional learning necessary.

By adding this specialised step, the model becomes more reliable in narrow fields. It gains the ability to provide responses or images tailored to its users.

Read more: The Foundation of Generative AI: Neural Networks Explained

The Process of Adapting AI Models

Once the broad training stage is complete, the AI system still has room to improve. Developers prepare new, relevant training data and feed it into the existing model.

During this process, adjustments are made to make sure the AI responds in the way the user expects. The model becomes more skilled at handling domain-specific problems.

This adjustment is usually faster and more efficient than the first training phase. The AI system already knows general rules. Now, it only needs to learn how to apply them to a new task.

For example, an AI agent trained in general text writing can quickly adapt to writing legal contracts or healthcare reports. This reduces the time and computing power required compared to training a model from scratch.
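
The saving can be illustrated with a small experiment. The sketch below is a toy under stated assumptions: the model is a single line `y = w*x + b`, the "pre-trained" starting point `(2.0, 0.0)` is assumed to come from earlier training on general data, and the task data, learning rate, and target loss are invented for the example. It counts how many gradient steps each starting point needs to reach the same loss on the new task.

```python
# Toy comparison: steps needed to fit a new task when starting from
# pre-trained weights versus starting from scratch. Values are illustrative.

def mse(w, b, data):
    """Mean squared error of the line y = w*x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def steps_to_target(w, b, data, lr=0.1, target=1e-3, max_steps=10_000):
    """Count gradient-descent steps until the loss drops below `target`."""
    n = len(data)
    for step in range(max_steps):
        if mse(w, b, data) < target:
            return step
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return max_steps

# The new task is close to, but not the same as, the general rule y = 2x.
task_data = [(x, 2.2 * x + 0.3) for x in (-1.0, -0.5, 0.0, 0.5, 1.0)]

# Assumed result of earlier general training: w = 2.0, b = 0.0.
finetune_steps = steps_to_target(2.0, 0.0, task_data)
# Training from scratch starts with no prior knowledge at all.
scratch_steps = steps_to_target(0.0, 0.0, task_data)

print(f"steps when fine-tuning:    {finetune_steps}")
print(f"steps when starting fresh: {scratch_steps}")
```

The pre-trained starting point is already near the solution, so it needs fewer updates. With real models the gap is far larger, because the first training stage is where most of the computing cost sits.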

Fine-Tuning in Large Language Models

Large language models (LLMs) such as GPT have billions of parameters. These parameters store what the model learnt during its main training. Although this gives the models broad abilities, they still require fine adjustments to perform specific roles.

Many businesses use LLMs for chatbots, customer support, or content writing. To make them fit these roles better, extra training with company-specific data is often necessary.

This process allows the models to understand brand language, comply with policies, and avoid inappropriate responses. It ensures the text they generate is suitable for its intended audience.
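
Preparing company-specific data is often the bulk of the work. As a rough sketch, the snippet below turns a few question-and-answer pairs into JSON Lines, one example per line, which is a common interchange format for training data. The field names `prompt` and `completion`, the sample records, and the helper `to_jsonl` are assumptions made for illustration; different training tools expect different schemas, so check the one you use.

```python
import json

def to_jsonl(records):
    """Convert prompt/completion pairs to JSON Lines, skipping incomplete ones."""
    lines = []
    for rec in records:
        prompt = rec.get("prompt", "").strip()
        completion = rec.get("completion", "").strip()
        if not prompt or not completion:
            continue  # an example with a missing side teaches the model nothing
        lines.append(json.dumps({"prompt": prompt, "completion": completion},
                                ensure_ascii=False))
    return "\n".join(lines)

# Hypothetical support examples written in the company's own tone of voice.
records = [
    {"prompt": "How do I reset my password?",
     "completion": "Go to Settings, choose Security, then select Reset password."},
    {"prompt": "Do you ship outside the UK?",
     "completion": "Yes, we ship to most European countries within 5 working days."},
    {"prompt": "What is your refund policy?", "completion": "   "},  # dropped
]

jsonl_text = to_jsonl(records)
print(jsonl_text)
```

Filtering out empty or incomplete examples at this stage is a simple design choice that pays off later, since small fine-tuning sets are sensitive to low-quality records.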

In summary, while models learn basic rules during initial training, task-specific learning makes them useful in real applications.

Read more: Markov Chains in Generative AI Explained

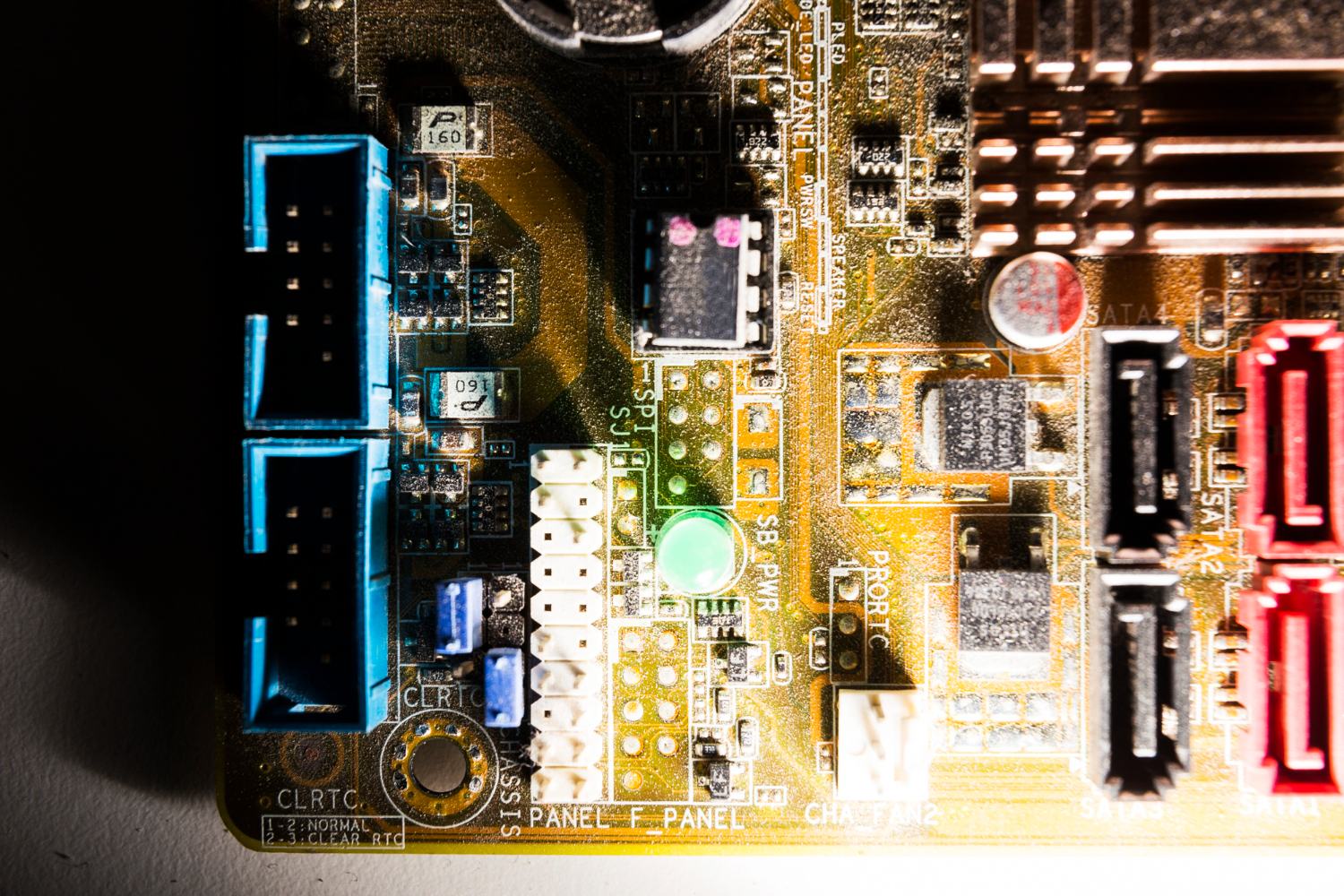

Adjustments for Image Generation

Image generation using generative AI also benefits from this process. Tools like Stable Diffusion are widely used to produce high-quality visuals. Still, they need to be adjusted to meet business needs.

For example, a car manufacturer may require the AI to produce images of their latest models in various environments. A general image generator may not understand the details needed. Adjustments help ensure the AI follows brand design rules and produces relevant pictures.

Additionally, fine adjustments help control the art style and maintain consistency across images. This is important for marketing, product listings, and social media posts.

Without this process, generated images may vary too much, failing to meet expectations. Adjusted models provide consistency and accuracy.

Read more: Control Image Generation with Stable Diffusion

Boosting Real-Time Performance

Some industries require AI to work in real time. Applications in finance, healthcare, and autonomous driving cannot afford delays or errors. Models must respond quickly and provide precise output.

In such cases, adjustments can make AI systems more efficient. A general-purpose model may take more time to decide or may make mistakes. A model tailored to the task, often a smaller one, can produce faster and more accurate responses.

For example, in autonomous vehicles, recognising pedestrians, traffic signs, or road conditions must happen instantly. Adjusted AI models handle this better as they are trained for the exact task.

Simplifying AI Model Deployment

Customising pre-trained models has another advantage. It makes AI easier to deploy across industries. Businesses often do not need to build models from the ground up.

Instead, they use existing deep learning models and make adjustments. This cuts costs and saves time. It also reduces the demand for large amounts of computing power.

Since many companies deal with limited resources, using adjusted models offers a practical way to introduce AI. They get access to advanced AI applications without investing in expensive infrastructure.

Fine-Tuning Improves AI Agents

AI-driven assistants, or AI agents, are becoming more common. These agents help users with tasks like booking appointments, answering questions, or providing updates.

Generic agents may not perform well when given complex or domain-specific tasks. They may lack context or fail to understand detailed requests.

Through task-focused learning, these agents become smarter and more useful. They learn company rules, language preferences, and important domain knowledge.

This makes them more helpful and improves the overall user experience.

Read more: Agentic AI vs Generative AI: What Sets Them Apart?

Managing Large and Complex Models

With models trained on vast amounts of data, managing performance is important. When models grow to billions of parameters, keeping them efficient is difficult.

Applying adjustments can help control resource use and improve accuracy. A model specialised for one task does not have to be large enough to cover every possible pattern, so a smaller fine-tuned model can often stand in for a much larger general one.

It focuses on the data and responses that matter to the task, which can mean better performance and reduced operating costs.

Text-Based AI Benefits from Specialisation

Text generation is one of the most visible uses of generative AI. From writing blogs to summarising reports, AI-generated text saves time.

However, without adjustments, the output may not meet professional standards. Generic responses lack the depth or style many industries require.

Adding specialised training solves this problem. AI systems become capable of producing detailed and accurate documents. Whether for healthcare, law, or education, task-focused AI meets the demands of each field.

Supporting Diverse AI Applications

Generative AI models now support countless industries. From autonomous vehicles to marketing, they produce useful results. Still, each use case has different requirements.

For example, satellite companies need AI to classify satellite images. Marketing teams need AI to write catchy headlines. Without adjustments, one model cannot handle all these jobs equally well.

By applying tailored learning, each application benefits. The AI produces relevant, high-quality output that meets specific business goals.

Read more: Symbolic AI vs Generative AI: How They Shape Technology

Future Trends and Opportunities in Fine-Tuning

The world of generative AI is growing fast. With demand rising, new methods for improving models continue to appear. One area that will see big changes is the way fine adjustments are applied.

Traditional fine-tuning often requires large amounts of time and resources. Training data must be prepared carefully. The process also needs powerful hardware to deal with the billions of parameters in large language models (LLMs). This makes it harder for smaller teams or businesses to make full use of these models.

However, new ideas are starting to change this. One approach involves low-rank adaptation (LoRA) methods. These make it possible to adjust models without changing all their internal weights.

Instead, the original weights stay frozen and small, trainable low-rank matrices are added on top of the existing structure. This reduces the need for huge computing power. It also makes fine adjustments faster and more cost-effective.
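
A short sketch helps show where the saving comes from. In LoRA, a frozen weight matrix `W` is left untouched, and the update is expressed as the product of two much smaller matrices, `B` and `A`, scaled by `alpha / r`. The code below is a plain-Python illustration, not a library implementation: the matrix sizes, the `alpha` value, and the helper names `matvec`, `lora_forward`, and `param_counts` are assumptions for this example.

```python
import random

def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, v)) for row in m]

def lora_forward(W, A, B, x, alpha, r):
    """Compute y = W x + (alpha / r) * B (A x). W is frozen; only A and B train."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]

def param_counts(d_out, d_in, r):
    """Trainable parameters for full fine-tuning versus a rank-r LoRA update."""
    full = d_out * d_in
    lora = r * (d_in + d_out)
    return full, lora

random.seed(0)
d_out, d_in, r, alpha = 6, 8, 2, 4
W = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]  # frozen
A = [[random.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]   # r x d_in
B = [[0.0] * r for _ in range(d_out)]  # d_out x r, zero so training starts at W

x = [1.0] * d_in
y_base = matvec(W, x)
y_lora = lora_forward(W, A, B, x, alpha, r)
print("same output before training:", y_lora == y_base)

# One 4096 x 4096 layer with rank 8, sizes chosen only as an example.
full, lora = param_counts(4096, 4096, 8)
print(f"full: {full:,}  LoRA: {lora:,}  share: {lora / full:.2%}")
```

For that single layer, the low-rank update trains 65,536 values instead of 16,777,216, which is 1/256 of the original count. Because `B` starts at zero, the adapted model behaves exactly like the original until training begins.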

Another growing trend is task-specific adapters. These act as small modules within the main AI model. Each adapter is trained for a different job.

This allows one big model to serve many industries without full retraining. Users can switch between adapters depending on their needs.
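
As an illustration of the switching idea, the sketch below keeps one shared set of base weights and a dictionary of small adapter modules. Everything here is hypothetical: the class `AdaptedModel`, the adapter names, and the tiny hand-picked matrices exist only for this example. The adapter design shown, a small "down" projection, a ReLU, an "up" projection, and a residual addition, is one common pattern, and real systems may differ.

```python
def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, v)) for row in m]

class AdaptedModel:
    """One frozen base layer plus named, swappable adapter modules."""

    def __init__(self, base_weights):
        self.base_weights = base_weights  # shared and never modified
        self.adapters = {}                # name -> (down, up) matrices
        self.active = None

    def add_adapter(self, name, down, up):
        self.adapters[name] = (down, up)

    def set_active(self, name):
        if name is not None and name not in self.adapters:
            raise KeyError(f"unknown adapter: {name}")
        self.active = name

    def forward(self, x):
        h = matvec(self.base_weights, x)
        if self.active is None:
            return h  # plain base model
        down, up = self.adapters[self.active]
        z = [max(0.0, v) for v in matvec(down, h)]  # small ReLU bottleneck
        delta = matvec(up, z)
        return [a + d for a, d in zip(h, delta)]    # residual connection

# Identity base weights keep the arithmetic easy to follow.
model = AdaptedModel([[1.0, 0.0], [0.0, 1.0]])
model.add_adapter("legal", down=[[1.0, 0.0]], up=[[0.5], [0.0]])
model.add_adapter("medical", down=[[0.0, 1.0]], up=[[0.0], [-1.0]])

x = [1.0, 2.0]
base_out = model.forward(x)
model.set_active("legal")
legal_out = model.forward(x)
model.set_active("medical")
medical_out = model.forward(x)
print(base_out, legal_out, medical_out)
```

Switching jobs only changes which small module is active. The large base weights are loaded once and shared, which is what makes serving many use cases from one model practical.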

There is also interest in on-device fine-tuning. Today, most adjustments happen in the cloud. This demands strong internet connections and central servers.

In the future, smaller models and better optimisation may allow personal devices to handle updates locally. This could mean smarter phones or laptops that adapt to each user’s preferences without sending data elsewhere.

In addition, improvements in synthetic data generation offer more options. Creating new, artificial data sets using generative adversarial network (GAN) methods helps solve the problem of limited real-world examples. By generating high-quality synthetic data, AI systems can learn new tasks even when labelled data is scarce.

As AI advances, fine adjustments will likely become easier, cheaper, and more flexible. This will open up new opportunities for smaller companies and individuals to make the most of deep learning models.

TechnoLynx stays ready for these changes. We work with the latest methods to help clients get the best from AI. Our team keeps up with trends like modular adapters and efficient training. This means your business will always be ready for the next step in AI.

Read more: Generative AI Models: How They Work and Why They Matter

Conclusion

The role of fine adjustments in AI is essential. Without them, generative AI models remain too general. By learning task-specific patterns, these systems become valuable tools.

They produce better text, images, and decisions across industries. This process reduces errors, improves efficiency, and ensures consistency.

Whether for large language models (LLMs), Stable Diffusion image generators, or AI agents, applying specialised learning makes AI practical and useful in the real world.

At TechnoLynx, we help businesses make the most of AI. Our team designs and refines solutions tailored to your industry. Whether you need high-quality text generation, accurate image production, or domain-specific AI models, we can support you. Contact TechnoLynx today to bring advanced, reliable AI into your business operations.

Image credits: Freepik